The process of hearing is a marvel of biological engineering, involving intricate mechanisms that convert sound waves into electrical signals interpreted by the brain. Over the years, scientists have proposed various models to explain how hearing works, each shedding light on different aspects of this complex process.

In this article, we will look at the various existing models that explain the fascinating experience of sound perception.

Transmission Model

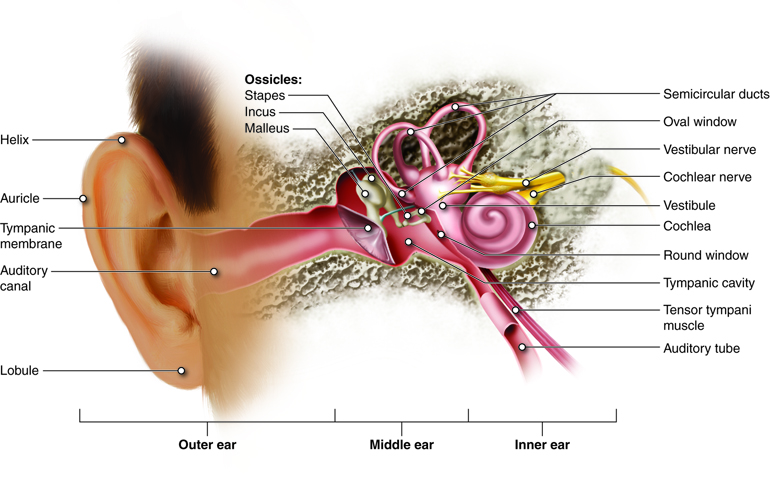

The transmission model of hearing, also known as the mechanical model, outlines the basic pathway of sound waves from the external environment to the brain. It begins with the outer ear collecting sound waves and channelling them through the ear canal to the eardrum, causing it to vibrate. These vibrations are then transmitted to the middle ear, where the ossicles—three tiny bones (the hammer, anvil, and stirrup)—amplify and transmit the vibrations to the inner ear. Finally, the cochlea, a spiral-shaped structure filled with fluid and lined with tiny hair cells, converts these mechanical vibrations into electrical signals that are sent to the brain via the auditory nerve.

Let’s delve deeper into each stage of this model to appreciate its complexity:

- Sound Reception: Sound transmission begins with the reception of sound waves by the outer ear. Its visible part, the pinna (the cartilaginous flap of the ear that can be touched), helps to localize sounds and funnel sound waves into the ear canal, where they travel towards the eardrum.

- Sound Amplification: As sound waves reach the eardrum, they cause it to vibrate. These vibrations are then transmitted through the ossicles—three small bones in the middle ear: the malleus (hammer), incus (anvil), and stapes (stirrup). The ossicles form a chain that amplifies the vibrations received by the eardrum before transmitting them to the inner ear.

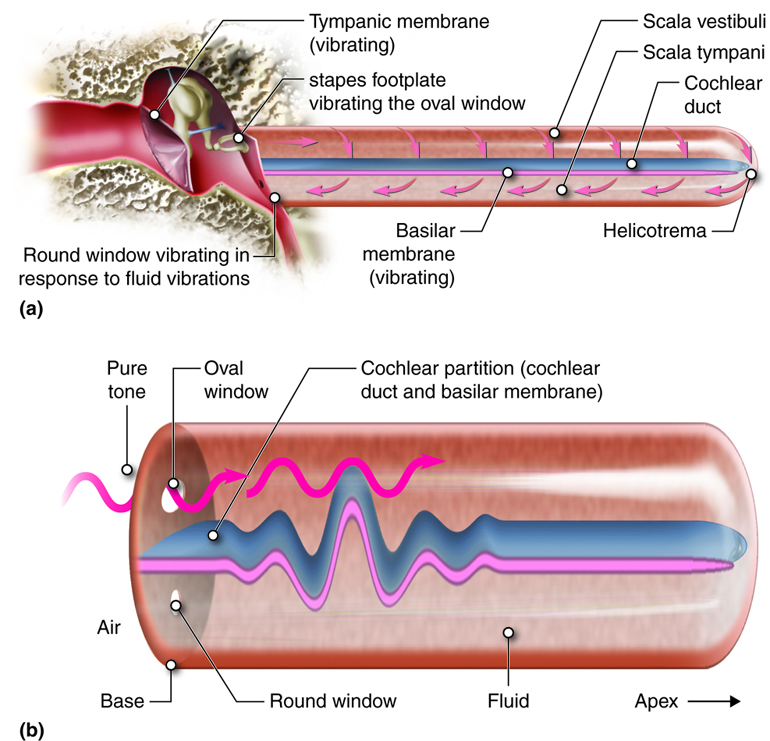

- Transfer of Mechanical Energy: The stapes, the last bone in the ossicular chain, connects to the oval window, a membrane-covered opening in the cochlea—a fluid-filled, spiral-shaped structure in the inner ear. The movement of the stapes against the oval window transfers mechanical energy from the ossicles to the fluid within the cochlea.

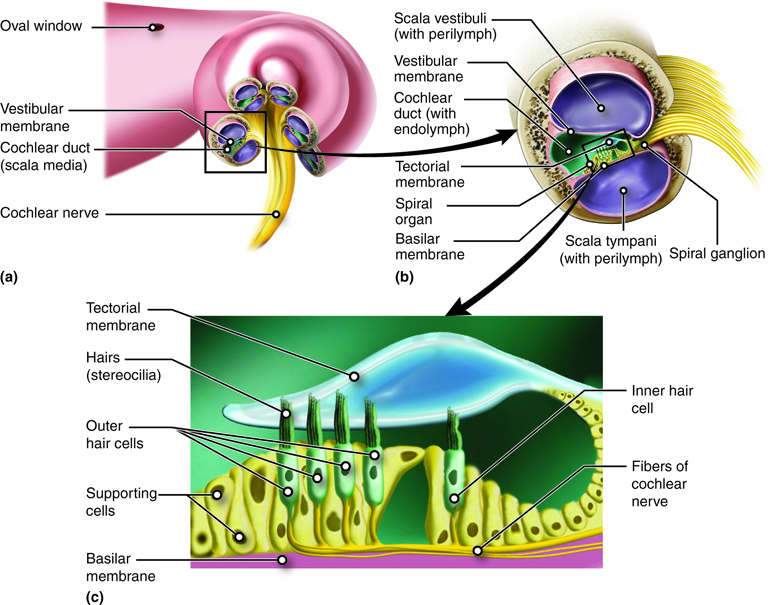

- Hair Cell Stimulation: Within the cochlea, the mechanical energy from the fluid movement stimulates specialized sensory cells called hair cells, which are housed in the organ of Corti. The hair cells are lined up along the basilar membrane, a structure that runs the length of the cochlea and varies in stiffness from base to apex. As the fluid waves move through the cochlea, they cause the basilar membrane to flex, bending the hair cells and initiating neural signalling.

- Neural Transmission: The bending of hair cells triggers the release of neurotransmitters, which in turn activate adjacent auditory nerve fibres. These fibres, bundled together in the auditory nerve, transmit electrical signals—encoded representations of sound frequency and intensity—to the brainstem and then to higher auditory centres in the brain, such as the auditory cortex.
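The "amplification" performed in the middle ear can be illustrated with a little arithmetic. A common textbook explanation is that the middle ear boosts pressure rather than adding energy: the force collected by the relatively large eardrum is concentrated onto the much smaller stapes footplate, and the lever action of the ossicles adds a small further gain. The sketch below assumes commonly quoted approximate values (about 55 mm² for the eardrum's effective area, 3.2 mm² for the footplate and a lever ratio of about 1.3); these figures are not from this article, vary between sources, and the function names are hypothetical.

```python
import math

# Approximate textbook values (assumptions, not taken from this article).
EARDRUM_AREA_MM2 = 55.0       # effective vibrating area of the eardrum
FOOTPLATE_AREA_MM2 = 3.2      # area of the stapes footplate at the oval window
OSSICULAR_LEVER_RATIO = 1.3   # mechanical advantage of the ossicular chain


def middle_ear_pressure_gain(eardrum_area: float, footplate_area: float,
                             lever_ratio: float) -> float:
    """Pressure gain from the eardrum to the oval window.

    Pressure is force per area, so funnelling the force from a large area
    onto a small one raises the pressure by the area ratio; the lever
    action of the ossicles multiplies the force by a further small factor.
    """
    area_ratio = eardrum_area / footplate_area
    return area_ratio * lever_ratio


def to_decibels(pressure_ratio: float) -> float:
    """Express a pressure ratio in decibels (20 * log10 for pressure)."""
    return 20.0 * math.log10(pressure_ratio)


gain = middle_ear_pressure_gain(EARDRUM_AREA_MM2, FOOTPLATE_AREA_MM2,
                                OSSICULAR_LEVER_RATIO)
print(f"pressure gain ~ {gain:.1f}x (~{to_decibels(gain):.0f} dB)")
```

With these assumed values the sketch reports a pressure gain of roughly 22 times, or about 27 dB.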

While this model offers a foundational understanding of sound transmission, it is important to remember that, as research progresses, further insights into the nuances of auditory processing continue to refine our understanding of how we perceive and interpret sound.

Place Theory

Proposed by German physicist Hermann von Helmholtz in the 19th century, the Place Theory suggests that different frequencies of sound waves are detected by specific locations along the basilar membrane within the cochlea, resulting in the perception of different pitches.

High-frequency sounds cause maximum displacement of the basilar membrane near the base of the cochlea, while low-frequency sounds displace it more toward the apex. This spatial distribution of activation is then translated into our perception of pitch.

The mechanical transmission of sound from the outside to the inside of the ear is the same as outlined in the Transmission Model.

Here are the main aspects of the Place Theory:

- Anatomy of the Cochlea: To understand the Place Theory, it’s essential to grasp the structure of the cochlea. The cochlea is a spiral-shaped, fluid-filled organ located in the inner ear. Within the cochlea runs the basilar membrane, which is narrower and stiffer at the base (near the oval window) and wider and more flexible towards the apex (farthest from the oval window).

- Frequency Mapping: According to the Place Theory, different frequencies of sound waves cause maximum displacement of the basilar membrane at specific locations along its length. High-frequency sounds lead to maximum displacement near the base of the cochlea; conversely, low-frequency sounds cause maximum displacement closer to the apex. This mapping of frequencies to places is also called Tonotopic Organisation.

- Hair Cell Stimulation: Hair cells, the sensory receptors within the cochlea, are responsible for converting mechanical vibrations into neural signals. When the basilar membrane vibrates in response to sound waves, it causes the hair cells to bend. This bending stimulates the hair cells to generate neural impulses, which are then transmitted via the auditory nerve to the brain. By analysing the pattern of hair cell activation along the basilar membrane, the brain can accurately perceive the frequency of incoming sound waves.

Limitations and Complementary Theories: While the Place Theory provides a compelling explanation for the perception of high pitches, subsequent research has revealed its limitations. Low-frequency sounds set a broad stretch of the basilar membrane vibrating rather than producing a sharply localized peak, so place alone does not fully account for how we distinguish low pitches. The Frequency Theory and the Volley Theory, discussed below, offer complementary explanations based on the timing of neural firing, which works best for lower frequencies.
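The tonotopic map described in this section can be made concrete with the Greenwood function, a widely used empirical fit that relates a position on the basilar membrane to the frequency that peaks there. The sketch below assumes the commonly cited human constants (A = 165.4 Hz, a = 2.1, k = 0.88), with position measured from the apex (0) to the base (1). It illustrates place coding only; it is not part of Helmholtz's original theory, and the function names are hypothetical.

```python
import math

# Commonly cited Greenwood constants for the human cochlea (assumed for this sketch).
GREENWOOD_A = 165.4     # scaling constant, in Hz
GREENWOOD_ALPHA = 2.1   # slope constant, for position expressed as a 0-1 fraction
GREENWOOD_K = 0.88      # constant that sets the lowest frequency at the apex


def greenwood_frequency(x: float) -> float:
    """Characteristic frequency (Hz) at relative position x along the
    basilar membrane, where x = 0 is the apex and x = 1 is the base."""
    if not 0.0 <= x <= 1.0:
        raise ValueError("x must lie between 0 (apex) and 1 (base)")
    return GREENWOOD_A * (10 ** (GREENWOOD_ALPHA * x) - GREENWOOD_K)


def greenwood_position(freq_hz: float) -> float:
    """Inverse mapping: relative position (0 = apex, 1 = base) where a
    given frequency produces its maximum displacement."""
    return math.log10(freq_hz / GREENWOOD_A + GREENWOOD_K) / GREENWOOD_ALPHA


for x in (0.0, 0.25, 0.5, 0.75, 1.0):
    print(f"x = {x:.2f} -> {greenwood_frequency(x):8.0f} Hz")
```

Running the loop shows frequencies rising from roughly 20 Hz at the apex to roughly 20,000 Hz at the base, the same base-to-apex pattern described above.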

Frequency Theory

The frequency theory, also known as the temporal theory, suggests that the perception of pitch is based on the frequency of neural impulses travelling up the auditory nerve. According to this model, the rate at which the auditory nerve fires matches the frequency of the sound wave: lower frequencies correspond to slower rates of neural firing, and higher frequencies to faster rates. However, it is difficult to explain how neurons could fire at the very high rates needed for high-pitched sounds, which led to a refinement of this theory (see the Volley Theory below).

Here are the key points of the Frequency Theory:

- Firing Rate and Frequency Perception: At the heart of the Frequency Theory is the idea that the rate at which neurons fire in response to sound waves directly corresponds to the frequency of the sound. In other words, the faster a neuron fires, the higher the perceived pitch of the sound, and vice versa. For example, a 100 Hz sound wave would lead to auditory nerve fibres firing at a rate of 100 action potentials per second.

- Auditory Nerve Encoding: As sound waves cause the basilar membrane to vibrate, hair cells within the cochlea are stimulated, leading to the generation of neural impulses. These impulses are then transmitted via the auditory nerve to the brainstem and auditory cortex for processing. According to the Frequency Theory, the rate of neural firing in the auditory nerve reflects the frequency of the incoming sound wave.

- Phase Locking: A key mechanism supporting the Frequency Theory is phase locking, which refers to the synchronization of neural firing with the phase of a sound wave. As a sound wave oscillates, neurons in the auditory system tend to fire at the same point in each cycle (for example, near the peaks), allowing them to encode its frequency accurately. Phase locking is particularly effective for lower frequencies, where each cycle lasts long enough for neurons to keep pace with the waveform.

Limitations and Challenges: While the Frequency Theory provides a straightforward explanation for how the auditory system encodes low-frequency sounds, it faces challenges when it comes to higher frequencies. Neurons need a minimum time interval between action potentials (i.e. firings or impulse transmissions), called the refractory period, which makes it physiologically impossible for a single neuron to fire more than roughly 1,000 times per second. As a result, the Frequency Theory alone cannot account for how the brain processes higher frequencies.

Integration with Place Theory: To address the limitations of the Frequency Theory, researchers propose a hybrid model known as the Volley Theory, which combines elements of both the Frequency Theory and the Place Theory.
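The refractory-period limit can be expressed as simple arithmetic: if a neuron needs at least one refractory period between spikes, the Frequency Theory's "one spike per cycle" requirement only holds when each cycle of the sound lasts at least that long. The sketch below assumes a rounded refractory period of 1 ms (an illustrative value) and places each phase-locked spike at the peak of a sine wave; the function names are hypothetical.

```python
# Assumed, rounded value for illustration: an absolute refractory period of ~1 ms.
REFRACTORY_S = 0.001


def max_firing_rate_hz(refractory_s: float) -> float:
    """A neuron can fire at most once per refractory period."""
    return 1.0 / refractory_s


def phase_locked_spike_times(stimulus_hz: float, n_cycles: int) -> list[float]:
    """Frequency Theory's ideal neuron: one spike per cycle, always at the
    same phase (here the peak of a sine wave, a quarter period in)."""
    period = 1.0 / stimulus_hz
    return [cycle * period + period / 4 for cycle in range(n_cycles)]


def single_neuron_can_follow(stimulus_hz: float, refractory_s: float) -> bool:
    """True if firing on every cycle never exceeds the neuron's maximum rate."""
    return stimulus_hz <= max_firing_rate_hz(refractory_s)


print(f"max rate ~ {max_firing_rate_hz(REFRACTORY_S):.0f} spikes/s")
for freq in (100.0, 440.0, 4000.0):
    print(f"{freq:>6.0f} Hz: one neuron can follow? "
          f"{single_neuron_can_follow(freq, REFRACTORY_S)}")

# Spike times (in ms) of an ideal phase-locked neuron following a 100 Hz tone.
print([round(t * 1000, 2) for t in phase_locked_spike_times(100.0, 3)])
```

Under this assumption a single neuron keeps up with 100 Hz and 440 Hz tones but not with a 4,000 Hz tone, which is the gap the Volley Theory tries to close.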

Volley Theory

The volley theory proposes a compromise between the place theory and the frequency theory. Developed in the 1930s by Ernest Glen Wever and Charles W. Bray, it suggests that neurons in the auditory nerve alternate firing in sequence to achieve higher combined firing rates than individual neurons can achieve. This coordinated firing pattern, or “volley,” allows the brain to encode the frequency of sound waves up to a certain limit, typically around 4,000 Hz.

Here’s a deeper explanation of the Volley Theory:

- Neural Synchronization: At the core of the Volley Theory is the idea that auditory nerve fibres can work together in a synchronized manner to encode higher frequencies of sound. Instead of each individual neuron firing at the same frequency as the incoming sound wave, groups of neurons alternate their firing to collectively match the frequency of the sound.

- Temporal Coding: Whereas the Frequency Theory assumes that each neuron fires on every cycle of the sound wave, the Volley Theory places the frequency code in the timing of impulses across a whole group of fibres. Temporal coding refers to the precise timing of neural impulses relative to the phase of the sound wave. By synchronizing their firing with a particular phase of the wave, auditory nerve fibres can encode the frequency of the sound more accurately.

- Phase Locking and Volley Principle: Like the Frequency Theory, the Volley Theory builds upon the concept of phase locking. However, instead of relying on individual neurons to follow every cycle of the waveform, the Volley Principle suggests that groups of neurons take turns in a coordinated manner. For example, one neuron might fire at the peak of the first cycle, a second at the peak of the next cycle and a third at the peak of the one after that, so that each neuron fires only on some cycles while the group as a whole marks every cycle. This allows them to collectively encode frequencies beyond the limit of individual neurons.

- Frequency Range Extension: By coordinating their firing patterns, auditory nerve fibres can effectively extend the frequency range over which the auditory system can process sound. This mechanism enables the brain to perceive frequencies well beyond the limits predicted by the Frequency Theory, which relies on the maximum firing rate of individual neurons.
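The volley principle can be sketched as a simple rotation: successive cycles of the wave are handed to different neurons, each of which stays phase-locked to the peak but fires only on every n-th cycle. This toy simulation assumes a 4,000 Hz tone and a 1 ms refractory period, and ignores the randomness of real neural firing; all names are hypothetical.

```python
# Illustrative assumptions for this toy simulation.
STIMULUS_HZ = 4000.0  # a 4 kHz tone
REFRACTORY_S = 0.001  # ~1 ms refractory period per neuron


def min_neurons_for_volley(stimulus_hz: float, refractory_s: float) -> int:
    """Smallest group that can mark every cycle while each member waits
    at least one refractory period between its own spikes."""
    n = 1
    # A neuron firing on every n-th cycle has an interval of n / stimulus_hz seconds.
    while n / stimulus_hz < refractory_s:
        n += 1
    return n


def volley_spike_times(stimulus_hz: float, n_neurons: int,
                       n_cycles: int) -> dict[int, list[float]]:
    """Hand successive cycles to neurons in rotation (the volley principle).

    Neuron i fires on cycles i, i + n_neurons, i + 2 * n_neurons, ...,
    always at the peak of a sine wave (a quarter period into the cycle),
    so every fibre stays phase-locked while skipping cycles.
    """
    period = 1.0 / stimulus_hz
    spikes: dict[int, list[float]] = {i: [] for i in range(n_neurons)}
    for cycle in range(n_cycles):
        spikes[cycle % n_neurons].append(cycle * period + period / 4)
    return spikes


def pooled_rate_hz(spikes: dict[int, list[float]]) -> float:
    """Firing rate of the whole group, from the mean interval of all spikes merged."""
    pooled = sorted(t for times in spikes.values() for t in times)
    intervals = [later - earlier for earlier, later in zip(pooled, pooled[1:])]
    return 1.0 / (sum(intervals) / len(intervals))


n = min_neurons_for_volley(STIMULUS_HZ, REFRACTORY_S)
spikes = volley_spike_times(STIMULUS_HZ, n, n_cycles=40)
print(f"{n} neurons, each firing at ~{STIMULUS_HZ / n:.0f} spikes/s")
print(f"pooled rate ~ {pooled_rate_hz(spikes):.0f} spikes/s")
```

With these assumptions, four neurons each firing about 1,000 times per second together produce a spike on every cycle of the 4,000 Hz tone.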

In summary, the Volley Theory offers a compelling explanation for how the auditory system encodes higher frequencies of sound through the coordinated firing of auditory nerve fibres. By introducing the concept of temporal coding and collective neural responses, it expands our understanding of auditory perception and complements the other existing models.

Bone Conduction according to Tomatis

Alfred Tomatis, a French otolaryngologist, placed particular emphasis on bone conduction as a crucial element in the process of hearing. Tomatis proposed that the vibrations produced by sound waves are transmitted not only through the air in the ear canal but also through the bones of the skull, particularly the temporal bones. This bone conduction pathway allows for direct stimulation of the cochlea, bypassing the outer and middle ear.

Tomatis’ work emphasized the importance of bone conduction in auditory processing, particularly in situations where air conduction is compromised, such as in cases of conductive hearing loss. He developed auditory training programs based on the principles of bone conduction to help individuals improve their auditory processing skills and address various auditory challenges.

In this model, bone conduction complements air conduction, providing additional pathways for sound transmission and contributing to the overall perception of sound.

Electromodel of the Ear by Pohlman

The electromodel of the ear, proposed by David T. Pohlman, offers a unique perspective on auditory processing by emphasizing the role of electrical phenomena within the cochlea. Pohlman’s model suggests that in addition to the well-established mechanical processes involved in hearing, such as the vibration of the ossicles and the movement of hair cells, there exists a significant electrical aspect to auditory transduction.

In this electromodel, sound waves reaching the cochlea not only cause mechanical vibrations but also induce changes in the electrical properties of cochlear tissues. These changes in electrical potential occur within the hair cells, the primary sensory receptors of the cochlea, and they modulate the release of neurotransmitters at the synapses between hair cells and auditory nerve fibres, which is how mechanical stimuli are converted into neural signals.

By emphasizing the role of bioelectric phenomena in auditory transduction, Pohlman’s electromodel suggests that disruptions in these electrical processes (whether due to genetic mutations, environmental factors, or aging) may contribute to auditory disorders and hearing impairments.

Auditory Scene Analysis (ASA)

I’d like to close this presentation of the various models of hearing with Auditory Scene Analysis (ASA), which goes a step further: rather than focusing on how sounds reach the brain, it looks at what happens once they do.

Auditory Scene Analysis is a cognitive process that enables us to perceive and understand complex auditory environments by organizing and segregating sound sources into distinct perceptual streams or objects. Developed by psychologist Albert Bregman and set out in his 1990 book Auditory Scene Analysis, ASA provides a framework for how the human auditory system parses and makes sense of the mixture of sounds present in everyday listening situations.

Here’s a glance at Auditory Scene Analysis and its key concepts:

- Auditory Grouping: Auditory Grouping refers to the process by which the auditory system groups together sounds that share similar acoustic properties, such as pitch, timbre, spatial location, and temporal onset. These perceptual groupings help organize individual sound elements into coherent auditory objects, making it easier for the brain to process and interpret the auditory scene.

- Auditory Streaming: Auditory Streaming occurs when the auditory system separates a mixture of sound sources into distinct perceptual streams based on differences in their acoustic attributes. For example, when listening to a conversation in a noisy room, we can focus on the voice of a particular speaker while simultaneously segregating and ignoring background noise. This ability to attend to specific streams of sound while filtering out irrelevant information is essential for effective communication and auditory comprehension.

- Auditory Segregation: Auditory Segregation involves the separation of overlapping or concurrent sound sources into distinct auditory objects. This process relies on various auditory cues, such as spatial location, temporal onset, spectral content, and continuity/discontinuity of sound. By analyzing these cues, the auditory system can distinguish between different sound sources and allocate attention to relevant information.

- Auditory Grouping Cues: Auditory grouping cues help organize sound elements into perceptual groups. These cues include:

  - Proximity: Sounds that occur close together in time or space are likely to be grouped together.

  - Similarity: Sounds that share similar acoustic properties are grouped together.

  - Continuity: Sounds that follow a smooth, continuous trajectory are perceived as belonging to the same auditory object.

  - Common Fate: Sound components that change together (for example, starting, stopping, or gliding in pitch at the same time) are grouped together.

  - Temporal Coherence: Sounds that have similar temporal patterns or rhythms are grouped together.

- Implications for Perception and Communication: Auditory Scene Analysis plays a crucial role in our everyday listening experiences, influencing how we perceive and interact with our auditory environment. It allows us to navigate complex acoustic environments, such as crowded social gatherings or urban street scenes, by selectively attending to relevant sound sources and filtering out distractions. Additionally, ASA contributes to our ability to understand speech in noisy environments, detect environmental sounds, and localize sound sources in space.

- Neural Mechanisms: Research suggests that Auditory Scene Analysis involves the coordinated activity of multiple brain regions, including the auditory cortex, frontal cortex, and parietal cortex. These regions work together to process and integrate incoming auditory information, extract relevant features, and generate perceptual representations of auditory scenes.
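As a toy illustration of the proximity and similarity cues, the sketch below groups a sequence of tones into streams purely by pitch distance: each tone joins the stream whose last tone is closest in pitch, unless the jump exceeds a threshold. The 4-semitone threshold and the function names are hypothetical, and the sketch ignores timing, timbre, spatial location and attention, so it is neither Bregman's model nor a model of the brain; it only mirrors the classic observation that alternating tones far apart in pitch tend to split into separate streams.

```python
import math


def semitone_distance(f1: float, f2: float) -> float:
    """Pitch distance between two frequencies in semitones (12 per octave)."""
    return abs(12.0 * math.log2(f2 / f1))


def group_into_streams(tone_freqs_hz: list[float],
                       max_jump_semitones: float) -> list[list[float]]:
    """Greedy toy grouping by pitch proximity.

    Each tone joins the stream whose most recent tone is closest in pitch,
    provided the jump is within max_jump_semitones; otherwise it starts a
    new stream.
    """
    streams: list[list[float]] = []
    for freq in tone_freqs_hz:
        best_stream = None
        best_dist = max_jump_semitones
        for stream in streams:
            dist = semitone_distance(stream[-1], freq)
            if dist <= best_dist:
                best_stream, best_dist = stream, dist
        if best_stream is None:
            streams.append([freq])
        else:
            best_stream.append(freq)
    return streams


MAX_JUMP = 4.0  # hypothetical grouping threshold, in semitones

close = [500.0, 530.0, 500.0, 530.0, 500.0, 530.0]   # about 1 semitone apart
far = [500.0, 1000.0, 500.0, 1000.0, 500.0, 1000.0]  # one octave apart

print(len(group_into_streams(close, MAX_JUMP)))  # 1
print(len(group_into_streams(far, MAX_JUMP)))    # 2
```

Lowering MAX_JUMP below the spacing of the close sequence (about 1 semitone) would split that sequence into two streams as well.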

Auditory Scene Analysis helps explain how our brain organizes and makes sense of the sounds around us. It shows how we separate different sounds, group them together, and interpret complex auditory scenes. By understanding these processes, we gain insight into how we navigate and make sense of the rich soundscape of everyday life.

Conclusion

These models of hearing provide valuable insights into the complex mechanisms of auditory perception. While each offers a different perspective, their variety also highlights that truly understanding how such complex physiological processes work is a long journey of formulating and testing hypotheses. As research in auditory neuroscience continues to evolve, these models will likely be refined and integrated, bringing us closer to a more complete understanding, while also reminding us that the nature of perception remains, in many ways, an open question.
